reader comments

120 with 0 posters participating

On Monday, a group of AI researchers from Google, DeepMind, UC Berkeley, Princeton, and ETH Zurich released a paper outlining an adversarial attack that can extract a small percentage of training images from latent diffusion AI image synthesis models like Stable Diffusion. It challenges views that image synthesis models do not memorize their training data and that training data might remain private if not disclosed.

Recently, AI image synthesis models have been the subject of intense ethical debate and even legal action. Proponents and opponents of generative AI tools regularly argue over the privacy and copyright implications of these new technologies. Adding fuel to either side of the argument could dramatically affect potential legal regulation of the technology, and as a result, this latest paper, authored by Nicholas Carlini et al., has perked up ears in AI circles.

However, Carlini’s results are not as clear-cut as they may first appear. Discovering instances of memorization in Stable Diffusion required 175 million image generations for testing and preexisting knowledge of trained images. Researchers only extracted 94 direct matches and 109 perceptual near-matches out of 350,000 high-probability-of-memorization images they tested (a set of known duplicates in the 160 million-image dataset used to train Stable Diffusion), resulting in a roughly 0.03 percent memorization rate in this particular scenario.

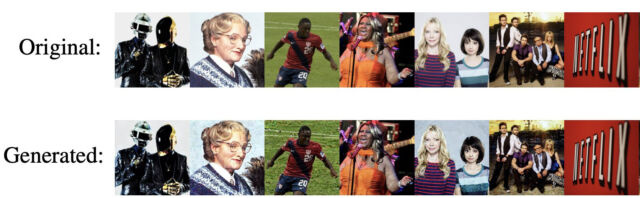

Example images that researchers extracted from Stable Diffusion v1.4 using a random sampling and membership inference procedure, with original images on the top row and extracted images on the bottom row.

Also, the researchers note that the “memorization” they’ve discovered is approximate since the AI model cannot produce identical byte-for-byte copies of the training images. By definition, Stable Diffusion cannot memorize large amounts of data because the size of the 160 million-image training dataset is many orders of magnitude larger than the 2GB Stable Diffusion AI model. That means any memorization that exists in the model is small, rare, and very difficult to accidentally extract.

Privacy and copyright implications

Still, even when present in very small quantities, the paper appears to show that approximate memorization in latent diffusion models does exist, and that could have implications for data privacy and copyright. The results may one day affect potential image synthesis regulation if the AI models become considered “lossy databases” that can reproduce training data, as one AI pundit speculated. Although considering the 0.03 percent hit rate, they would have to be considered very, very lossy databases—perhaps to a statistically insignificant degree.

obtained from the public web. The model then compresses knowledge of each image into a series of statistical weights, which form the neural network. This compressed knowledge is stored in a lower-dimensional representation called “latent space.” Sampling from this latent space allows the model to generate new images with similar properties to those in the training data set.

If, while training an image synthesis model, the same image is present many times in the dataset, it can result in “overfitting,” which can result in generations of a recognizable interpretation of the original image. For example, the Mona Lisa has been found to have this property in Stable Diffusion. That property allowed researchers to target known-duplicate images in the dataset while looking for memorization, which dramatically amplified their chances of finding a memorized match.

Along those lines, the researchers also experimented on the top 1,000 most-duplicated training images in the Google Imagen AI model and found a much higher percentage rate of memorization (2.3 percent) than Stable Diffusion. And by training their own AI models, the researchers found that diffusion models have a tendency to memorize images more than GANs.

Eric Wallace, one of the paper’s authors, shared some personal thoughts on the research in a Twitter thread. As stated in the paper, he suggested that AI model-makers should de-duplicate their data to reduce memorization. He also noted that Stable Diffusion’s model is small relative to its training set, so larger diffusion models are likely to memorize more. And he advised against applying today’s diffusion models to privacy-sensitive domains like medical imagery.

Like many academic papers, Carlini et al. 2023 is dense with nuance that could potentially be molded to fit a particular narrative as lawsuits around image synthesis play out, and the paper’s authors are aware that their research may come into legal play. But overall, their goal is to improve future diffusion models and reduce potential harms from memorization: “We believe that publishing our paper and publicly disclosing these privacy vulnerabilities is both ethical and responsible. Indeed, at the moment, no one appears to be immediately harmed by the (lack of) privacy of diffusion models; our goal with this work is thus to make sure to preempt these harms and encourage responsible training of diffusion models in the future.”