Though the concept of “deepfakes,” or AI-generated synthetic imagery, has been decried primarily in connection with involuntary depictions of people, the technology is dangerous (and interesting) in other ways as well. For instance, researchers have shown that it can be used to manipulate satellite imagery to produce real-looking, but totally fake, overhead maps of cities.

The study, led by Bo Zhao from the University of Washington, is not intended to alarm anyone but rather to show the risks and opportunities involved in applying this rather infamous technology to cartography. In fact, their approach has as much in common with “style transfer” techniques (redrawing images in impressionistic, crayon and arbitrary other fashions) as it does with deepfakes as they are commonly understood.

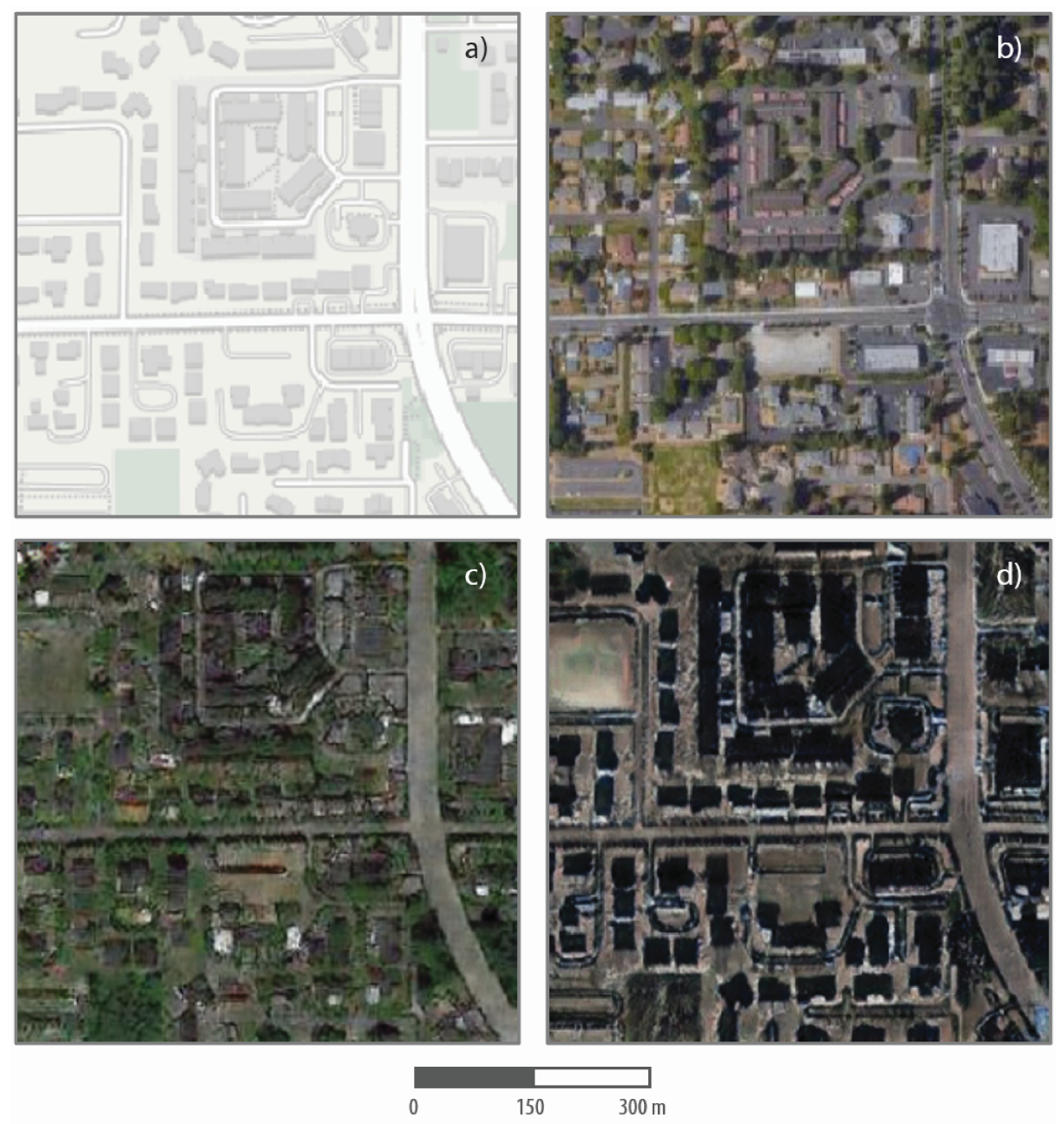

The team trained a machine learning system on satellite images of three different cities: Seattle, nearby Tacoma and Beijing. Each has its own distinctive look, just as a painter or medium does. For instance, Seattle tends to have larger overhanging trees and shrubbery and narrower streets, while Beijing is more monochrome and, in the images used for the study, the taller buildings cast long, dark shadows. The system learned to associate details of a street map (like Google’s or Apple’s) with those of the satellite view.

The resulting machine learning agent, when given a street map, returns a realistic-looking faux satellite image of what that area would look like if it were in any of those cities. In the accompanying image, the map corresponds to the top right satellite image of Tacoma, while the lower versions show how it might look in Seattle and Beijing.

A close inspection will show that the fake maps aren’t as sharp as the real one, and there are probably some logical inconsistencies, like streets that go nowhere. But at a glance the Seattle and Beijing images are perfectly plausible.

One only has to think for a few minutes to conceive of uses for fake maps like this, both legitimate and otherwise. The researchers suggest that the technique could be used to simulate imagery of places for which no satellite imagery is available, like one of these cities in the days before such things were possible, or for a planned expansion or zoning change. The system doesn’t have to imitate another place altogether; it could be trained on a more densely populated part of the same city, or one with wider streets.

It could conceivably even be used, as this rather more whimsical project was, to make realistic-looking modern maps from ancient hand-drawn ones.

Should technology like this be bent to less constructive purposes, the paper also looks at ways to detect such simulated imagery using careful examination of colors and features.

The work challenges the general assumption of the “absolute reliability of satellite images or other geospatial data,” said Zhao in a UW news article, and certainly, as with other media, that kind of thinking has to go by the wayside as new threats appear. You can read the full paper at the journal Cartography and Geographic Information Science.