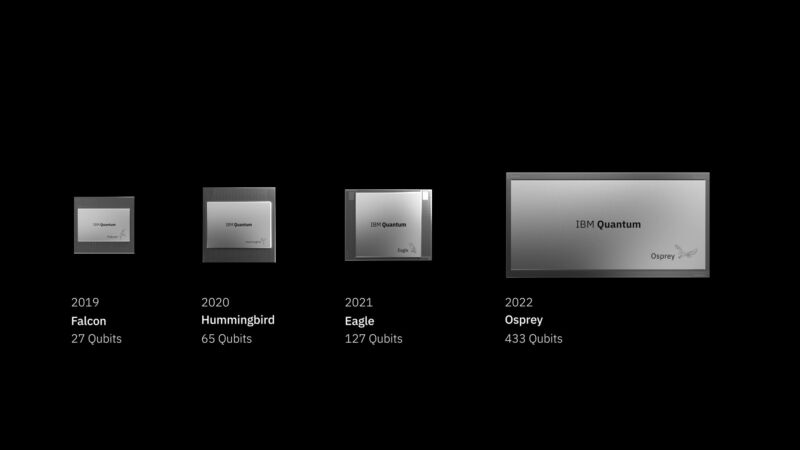

Today, IBM announced the latest generation of its family of avian-themed quantum processors, the Osprey. With more than three times the qubit count of its previous-generation Eagle processor, Osprey is the first to offer more than 400 qubits, which indicates the company remains on track to release the first 1,000-qubit processor next year.

Despite the high qubit count, there’s no need to rush out and re-encrypt all your sensitive data just yet. While the error rates of IBM’s qubits have steadily improved, they haven’t yet reached the point where all 433 qubits in Osprey can be used in a single algorithm without a very high probability of an error. For now, IBM is emphasizing that Osprey shows the company can stick to its aggressive road map for quantum computing, and that the work needed to make it useful is in progress.

On the road

To understand IBM’s announcement, it helps to understand the quantum computing market as a whole. That market now includes a lot of companies, from startups to large, established firms like IBM, Google, and Intel. They’ve bet on a variety of technologies, from trapped atoms to spare electrons to superconducting loops. Pretty much all of them agree that to reach quantum computing’s full potential, we need qubit counts in the tens of thousands, with error rates on each individual qubit low enough that groups of them can be linked together into a smaller number of error-corrected logical qubits.

There’s also a general consensus that quantum computing can be useful for some specific problems much sooner. If qubit counts are high enough and error rates get low enough, re-running specific calculations enough times to obtain an error-free result could still produce answers to problems that are difficult or impossible to solve on typical computers.
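To see why repetition helps, here is a minimal sketch under a simplifying assumption: each run of a calculation either finishes error-free or doesn’t, independently, with the same probability every time. The function name `runs_needed` and the 10 percent success rate are illustrative choices, not figures from IBM or anyone else.

```python
import math


def runs_needed(p_success: float, confidence: float) -> int:
    """Smallest number of independent runs k such that the chance of at
    least one error-free run, 1 - (1 - p_success)**k, reaches `confidence`.

    Assumes every run succeeds independently with the same probability
    (an illustrative simplification, not a model of real hardware).
    """
    if not 0 < p_success <= 1:
        raise ValueError("p_success must be in (0, 1]")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be in (0, 1)")
    if p_success == 1:
        return 1
    # Solve (1 - p_success)**k <= 1 - confidence for the smallest integer k.
    return math.ceil(math.log(1 - confidence) / math.log(1 - p_success))


# Hypothetical calculation that finishes error-free 10 percent of the time.
print(runs_needed(0.10, 0.99))  # 44
```

Even a calculation that usually fails can be useful under this model, as long as each run is cheap enough to repeat a few dozen times and a correct answer can be recognized.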

The question is what to do while we’re working to get the error rate down. Since the probability of errors largely scales with qubit counts, adding more qubits to a calculation increases the likelihood that calculations will fail. I’ve had one executive at a trapped-ion qubit company tell me that it would be trivial for them to trap more ions and have a higher qubit count, but they don’t see the point—the increase in errors would make it difficult to complete any calculations. Or, to put it differently, to have a good probability of getting a result from a calculation, you’d have to use fewer qubits than are available.
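The trade-off the executive describes can be illustrated with a deliberately crude model that assumes each qubit independently suffers an error with a fixed probability p, so an n-qubit calculation runs cleanly with probability (1 - p)^n. Real error budgets depend on gate counts, circuit depth, and readout, and the 0.1 percent figure below is hypothetical, not an IBM specification. The helper names are likewise invented for illustration.

```python
import math


def success_probability(n_qubits: int, p_error: float) -> float:
    """Chance that none of n_qubits suffers an error, assuming each one
    fails independently with probability p_error (a crude toy model)."""
    return (1 - p_error) ** n_qubits


def max_usable_qubits(p_error: float, target: float) -> int:
    """Largest n for which success_probability(n, p_error) >= target."""
    return math.floor(math.log(target) / math.log(1 - p_error))


p = 0.001  # hypothetical per-qubit error probability, not an IBM figure
for n in (100, 433, 1000):
    print(n, round(success_probability(n, p), 3))

# Most qubits usable while keeping at least even odds of a clean run.
print(max_usable_qubits(p, 0.5))  # 692
```

Under these assumptions, every added qubit erodes the odds of a clean run, which is why a company might choose to use fewer qubits than it has available.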

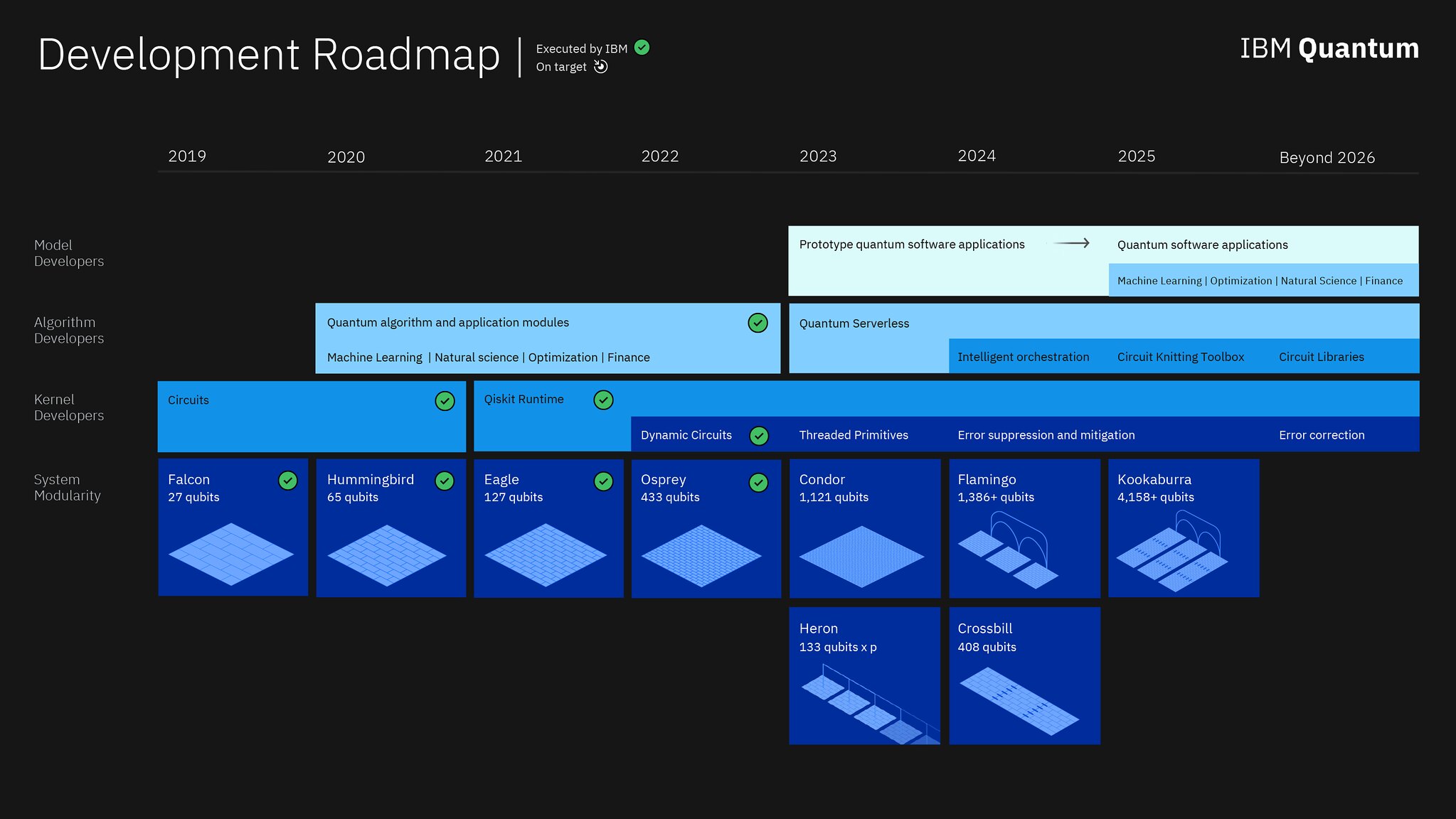

IBM’s earlier road map correctly predicted last year’s Eagle processor to be the first with more than 100 qubits, got Osprey’s qubit count right, and indicated that the company would be the first to clear 1,000 qubits with next year’s Condor. This year’s iteration of the road map extends the timeline and provides a lot of additional detail on what the company is doing beyond raising qubit counts.

The most notable addition is that Condor won’t be the only hardware released next year. The road map also includes a processor called Heron that has a lower qubit count but can potentially be linked with other processors to form a multi-chip package (a step that one competitor in the space has already taken). When asked what the biggest barrier to scaling qubit count was, IBM’s Jerry Chow answered that “it is size of the actual chip. Superconducting qubits are not the smallest structures. They’re actually pretty visible to your eye.” Fitting more of them onto a single chip creates challenges for the material structure of the chip, as well as for the control and readout connections that need to be routed within it.

“We think that we are going to turn this crank one more time, using this basic single chip type of technology with Condor,” Chow told Ars. “But honestly, it’s impractical if you start to make single chips that are probably a large proportion of a wafer size.” So, while Heron will start out as a side branch of the development process, all the chips beyond Condor will have the capability to form links with additional processors.