On Wednesday, Nvidia announced a collaboration with Microsoft to build a “massive” cloud computer focused on AI. It will reportedly use tens of thousands of high-end Nvidia GPUs for applications like deep learning and large language models. The companies aim to make it one of the most powerful AI supercomputers in the world.

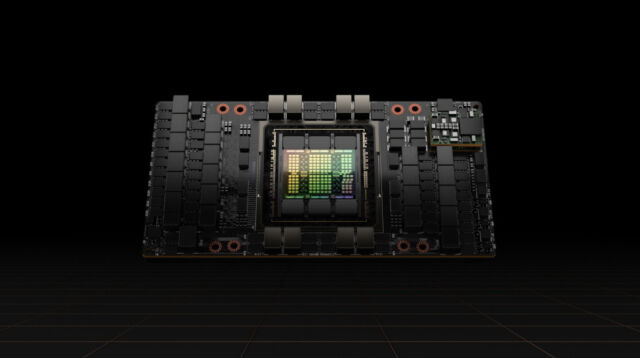

The new supercomputer will feature thousands of units of what is arguably the most powerful GPU in the world, the Hopper H100, which Nvidia launched in October. Nvidia will also provide its second most powerful GPU, the A100, and use its Quantum-2 InfiniBand networking platform, which can transfer data at 400 gigabits per second between servers, linking them together into a powerful cluster.

Meanwhile, Microsoft will contribute its Azure cloud infrastructure and ND- and NC-series virtual machines. Nvidia’s AI Enterprise platform will tie the whole thing together. The companies will also collaborate on DeepSpeed, Microsoft’s deep learning optimization software.

In a statement, Nvidia mentioned the applications the joint supercomputer might serve, among them generative AI.

Generative AI includes models such as Stable Diffusion and DALL-E that can synthesize novel images on demand. Similar models have appeared that can create video, synthesize voices, and perform transcription, among other uses. As computational demand increases for generative AI, Nvidia and Microsoft intend to be there to meet it.

Once Nvidia and Microsoft’s cloud computer comes online, customers will be able to deploy thousands of GPUs in a single cluster to “train even the most massive large language models, build the most complex recommender systems at scale, and enable generative AI at scale,” according to Nvidia.

The companies did not provide details on when the new supercomputer will be ready but mentioned that the announcement marks the beginning of a “multi-year collaboration.” It’s likely the cloud computer will scale up in capacity over time.