reader comments

26 with

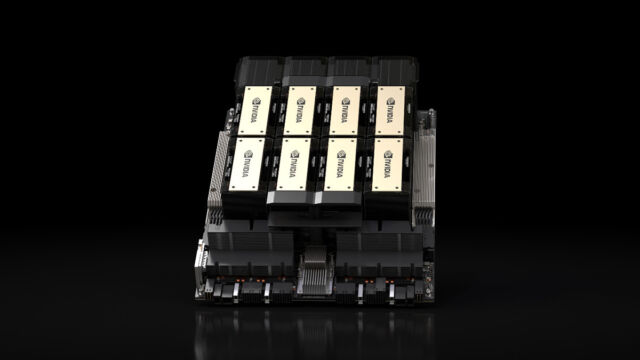

On Monday, Nvidia announced the HGX H200 Tensor Core GPU, which uses the Hopper architecture to accelerate AI applications. It’s a follow-up to the H100 GPU, which was released last year and was previously Nvidia’s most powerful AI GPU. If widely deployed, the H200 could enable far more powerful AI models in the near future, as well as faster response times for existing ones like ChatGPT.

According to experts, a lack of computing power (often called “compute”) has been a major bottleneck for AI progress this past year, hindering deployments of existing AI models and slowing the development of new ones. Shortages of powerful GPUs that accelerate AI models are largely to blame. One way to alleviate the compute bottleneck is to make more chips, but you can also make AI chips more powerful. That second approach may make the H200 an attractive product for cloud providers.

What’s the H200 good for? Despite the “G” in the name (it stands for “graphics”), data center GPUs like this one typically aren’t used for graphics. GPUs are ideal for AI applications because they perform vast numbers of matrix multiplications in parallel, which neural networks need in order to function. They are essential both for training an AI model and for “inference,” where people feed inputs into a trained model and it returns results.
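To make the point about parallel matrix multiplications concrete, here is a minimal pure-Python sketch of a single fully connected neural-network layer. The layer sizes, the weights, and the function names `matmul` and `dense_layer` are hypothetical, chosen only for illustration; real AI frameworks hand this work to GPUs through heavily optimized libraries rather than Python loops. The key observation is in the comment inside `matmul`: each output value can be computed independently of the others, which is the kind of work GPUs are built to spread across thousands of cores.

```python
# A minimal sketch: one fully connected neural-network layer expressed as a
# matrix multiplication. All sizes and values are made up for illustration.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Each output cell out[i][j] depends only on row i of `a` and column j of `b`,
    # so all n * m cells could in principle be computed at the same time.
    # GPUs are designed to exploit this kind of independence.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]

def relu(x):
    """Apply the ReLU activation element-wise: negative values become 0."""
    return [[max(0.0, v) for v in row] for row in x]

def dense_layer(inputs, weights, bias):
    """Compute relu(inputs @ weights + bias) for a batch of input rows."""
    z = matmul(inputs, weights)
    return relu([[v + b for v, b in zip(row, bias)] for row in z])

# Hypothetical batch of 2 inputs with 3 features each, mapped to 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [0.5, -1.0, 0.0]]
weights = [[0.1, -0.2],
           [0.0, 0.3],
           [0.2, 0.1]]
bias = [0.0, 0.1]

print(dense_layer(inputs, weights, bias))
```

A large language model stacks many such layers with weight matrices containing millions or billions of entries, so the same pattern, repeated at enormous scale, is what makes both training and inference so hungry for GPU compute.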

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at Nvidia, in a news release. “With Nvidia H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

For example, OpenAI has repeatedly said it’s low on GPU resources, and that causes slowdowns with ChatGPT. The company must rely on rate limiting to provide any service at all. Hypothetically, using the H200 might give the existing AI language models that run ChatGPT more breathing room to serve more customers.

According to Nvidia, the H200 is the first GPU to offer HBM3e memory. Thanks to HBM3e, the H200 offers 141GB of memory and 4.8 terabytes per second bandwidth, which Nvidia says is 2.4 times the memory bandwidth of the Nvidia A100 released in 2020. (Despite the A100’s age, it’s still in high demand due to shortages of more powerful chips.)
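The bandwidth figures lend themselves to some rough arithmetic. The sketch below derives the A100 bandwidth implied by Nvidia’s 2.4x claim and estimates how long each chip would take to read a hypothetical 70GB of model weights from memory once. It is an idealized lower bound under stated assumptions (no caching, no compute time, perfect efficiency), not a benchmark; the 70GB workload and the helper name `sweep_time_ms` are illustrative, and the A100 figure is derived from Nvidia’s ratio rather than taken from a spec sheet.

```python
# Back-of-the-envelope arithmetic on Nvidia's quoted H200 figures.
# Idealized: ignores caches, compute time, and real-world efficiency losses.

H200_MEMORY_GB = 141           # HBM3e capacity, per Nvidia
H200_BANDWIDTH_TB_S = 4.8      # memory bandwidth, per Nvidia
CLAIMED_RATIO_VS_A100 = 2.4    # Nvidia's stated bandwidth ratio vs. the A100

# A100 bandwidth implied by Nvidia's ratio (derived, not an official spec value).
IMPLIED_A100_BANDWIDTH_TB_S = H200_BANDWIDTH_TB_S / CLAIMED_RATIO_VS_A100

def sweep_time_ms(data_gb: float, bandwidth_tb_s: float) -> float:
    """Milliseconds needed to read `data_gb` gigabytes once at `bandwidth_tb_s`.

    1 TB/s = 1,000 GB/s, so gigabytes divided by TB/s yields milliseconds directly.
    """
    return data_gb / bandwidth_tb_s

# Hypothetical workload: 70 GB of model weights read once.
WEIGHTS_GB = 70.0
h200_ms = sweep_time_ms(WEIGHTS_GB, H200_BANDWIDTH_TB_S)
a100_ms = sweep_time_ms(WEIGHTS_GB, IMPLIED_A100_BANDWIDTH_TB_S)

print(f"Implied A100 bandwidth: {IMPLIED_A100_BANDWIDTH_TB_S:.1f} TB/s")
print(f"Reading {WEIGHTS_GB:.0f} GB once: H200 {h200_ms:.1f} ms, A100 {a100_ms:.1f} ms")
print(f"Reading all {H200_MEMORY_GB} GB of H200 memory once: "
      f"{sweep_time_ms(H200_MEMORY_GB, H200_BANDWIDTH_TB_S):.1f} ms")
```

Because the estimate is pure bandwidth arithmetic, the two times differ by exactly the claimed 2.4x. Actual workloads also depend on compute throughput, software, and how data moves between chips.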

Nvidia will make the H200 available in several form factors. These include Nvidia HGX H200 server boards in four- and eight-way configurations, which are compatible with both the hardware and software of HGX H100 systems. It will also be available in the Nvidia GH200 Grace Hopper Superchip, which combines a CPU and GPU into one package for even more AI oomph (that’s a technical term).

Amazon Web Services, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will be the first cloud service providers to deploy H200-based instances starting next year, and Nvidia says the H200 will be available “from global system manufacturers and cloud service providers” starting in Q2 2024.

Meanwhile, Nvidia has been playing a cat-and-mouse game with the US government over export restrictions for its powerful GPUs that limit sales to China. Last year, the US Department of Commerce announced restrictions intended to “keep advanced technologies out of the wrong hands” like China and Russia. Nvidia responded by creating new chips to get around those barriers, but the US recently banned those, too.

Last week, Reuters reported that Nvidia is at it again, introducing three new scaled-back AI chips (the HGX H20, L20 PCIe, and L2 PCIe) for the Chinese market, which represents a quarter of Nvidia’s data center chip revenue. Two of the chips fall below US restrictions, and a third is in a “gray zone” that might be permissible with a license. Expect to see more back-and-forth moves between the US and Nvidia in the months ahead.