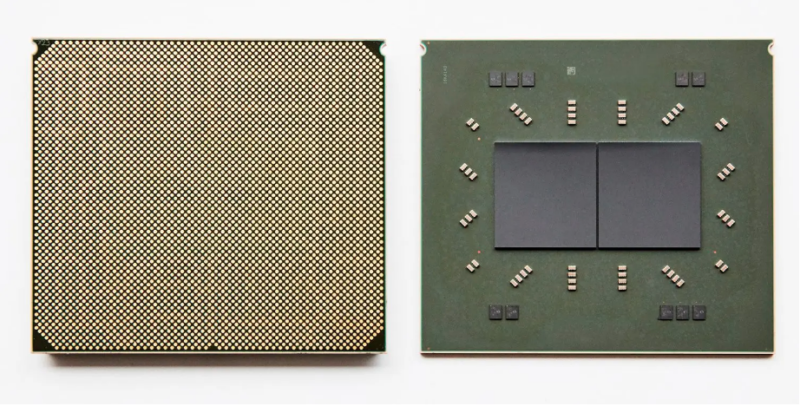

Each Telum package consists of two 7nm, eight-core / sixteen-thread processors running at a base clock speed above 5GHz. A typical system will have sixteen of these chips in total, arranged in four-socket “drawers.”

From the perspective of a traditional x86 computing enthusiast—or professional—mainframes are strange, archaic beasts. They’re physically enormous, power-hungry, and expensive by comparison to more traditional data-center gear, generally offering less compute per rack at a higher cost.

This raises the question, “Why keep using mainframes, then?” Once you hand-wave away the cynical answers that boil down to “because that’s how we’ve always done it,” the practical answers largely come down to reliability and consistency. As AnandTech’s Ian Cutress points out in a speculative piece focused on the Telum’s redesigned cache, “downtime of these [IBM Z] systems is measured in milliseconds per year.” (If true, that’s at least seven nines of availability; seven nines allows roughly three seconds of downtime per year.)
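For readers who want to check the "seven nines" arithmetic, here is a minimal Python sketch. The function name `nines` and the choice of a 365-day year are our own assumptions for illustration; nothing here comes from IBM or AnandTech.

```python
import math

# Assumption: a non-leap, 365-day year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def nines(downtime_seconds_per_year: float) -> int:
    """Return the number of whole leading nines of availability
    implied by a given amount of downtime per year."""
    unavailability = downtime_seconds_per_year / SECONDS_PER_YEAR
    return math.floor(-math.log10(unavailability))


# Downtime budgets from one millisecond up to five minutes per year.
for downtime in (0.001, 0.999, 300.0):
    print(f"{downtime:>8.3f} s/year -> {nines(downtime)} nines")
```

Anything under a second of downtime per year lands at seven nines or better, which is why "milliseconds per year" supports the parenthetical claim above.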

IBM’s own announcement of the Telum hints at just how different mainframe and commodity computing’s priorities are. It casually describes Telum’s memory interface as “capable of tolerating complete channel or DIMM failures, and designed to transparently recover data without impact to response time.”

When you pull a DIMM from a live, running x86 server, that server does not “transparently recover data”—it simply crashes.

IBM Z-series architecture

Telum is designed to be something of a one-chip-to-rule-them-all for mainframes, replacing a much more heterogeneous setup in earlier IBM mainframes.

The 14nm IBM z15 CPU that Telum is replacing features five processors in total: two pairs of 12-core Compute Processors and one System Controller. Each Compute Processor hosts 256MiB of L3 cache shared between its 12 cores, while the System Controller hosts a whopping 960MiB of L4 cache shared between the four Compute Processors.
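To put those z15 figures side by side, the following sketch simply totals them up. The class name `Z15Complex` and its field names are hypothetical labels of ours; only the numbers (four Compute Processors, 12 cores each, 256MiB of L3 each, 960MiB of L4) come from the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Z15Complex:
    """Hypothetical container for the z15 figures quoted in the text."""
    compute_processors: int = 4   # two pairs of Compute Processors
    cores_per_cp: int = 12
    l3_mib_per_cp: int = 256      # shared by the 12 cores on one CP
    l4_mib: int = 960             # on the System Controller, shared by all CPs

    @property
    def total_cores(self) -> int:
        return self.compute_processors * self.cores_per_cp

    @property
    def total_l3_mib(self) -> int:
        return self.compute_processors * self.l3_mib_per_cp

    @property
    def total_l3_l4_mib(self) -> int:
        return self.total_l3_mib + self.l4_mib


z15 = Z15Complex()
print(f"cores: {z15.total_cores}")
print(f"L3 total: {z15.total_l3_mib} MiB")
print(f"L3 + L4 total: {z15.total_l3_l4_mib} MiB")
print(f"L3 per core: {z15.l3_mib_per_cp / z15.cores_per_cp:.1f} MiB")
```

This is plain bookkeeping rather than a model of how the cache hierarchy behaves; it says nothing about latency or how lines move between levels.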

Cutress’s piece goes into detail on Telum’s cache mechanisms. He eventually sums them up by answering “How is this possible?” with a simple “magic.”

AI inference acceleration

Telum also introduces a 6TFLOPS on-die inference accelerator. It’s intended to be used for—among other things—real-time fraud detection during financial transactions (as opposed to shortly after the transaction).

In the quest for maximum performance and minimal latency, IBM threads several needles. The new inference accelerator is placed on-die, which allows for lower latency interconnects between the accelerator and CPU cores—but it’s not built into the cores themselves, a la Intel’s AVX-512 instruction set.

The problem with in-core inference acceleration like Intel’s is that it typically limits the AI processing power available to any single core. A Xeon core running an AVX-512 instruction only has the hardware inside its own core available to it, meaning larger inference jobs must be split among multiple Xeon cores to extract the full performance available.

Telum’s accelerator is on-die but off-core. This allows a single core to run inference workloads with the might of the entire on-die accelerator, not just the portion built into itself.
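The difference is easy to see with a toy calculation. The sketch below is a deliberately simplified model, not a benchmark: it takes the 6TFLOPS and eight-core figures quoted in this article, then assumes a hypothetical in-core design in which that same aggregate throughput is split evenly across the cores. It ignores memory bandwidth, contention between cores, and scheduling overhead, and it does not represent any real Xeon's AVX-512 throughput. The function names are ours.

```python
ACCEL_TFLOPS = 6.0   # on-die accelerator throughput quoted in the article
CORES_PER_CHIP = 8   # cores per Telum processor quoted in the article


def single_core_peak_tflops(total_tflops: float, cores: int, shared: bool) -> float:
    """Peak inference throughput one core can reach on its own.

    shared=True  -> off-core accelerator: one core can use all of it.
    shared=False -> hypothetical in-core design: each core owns an equal slice.
    """
    return total_tflops if shared else total_tflops / cores


def best_case_latency_ms(job_gflop: float, tflops: float) -> float:
    """Idealized time to finish a job of `job_gflop` billion operations."""
    seconds = (job_gflop * 1e9) / (tflops * 1e12)
    return seconds * 1e3


off_core = single_core_peak_tflops(ACCEL_TFLOPS, CORES_PER_CHIP, shared=True)
in_core = single_core_peak_tflops(ACCEL_TFLOPS, CORES_PER_CHIP, shared=False)

job = 3.0  # hypothetical 3 GFLOP inference job issued by a single core
print(f"off-core: {off_core} TFLOPS, {best_case_latency_ms(job, off_core):.2f} ms")
print(f"in-core:  {in_core} TFLOPS, {best_case_latency_ms(job, in_core):.2f} ms")
```

Under these assumptions, a single core gets eight times the peak throughput from the shared accelerator. The in-core design can only match it by splitting the job across all eight cores, which is the trade-off described above.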

Listing image by IBM