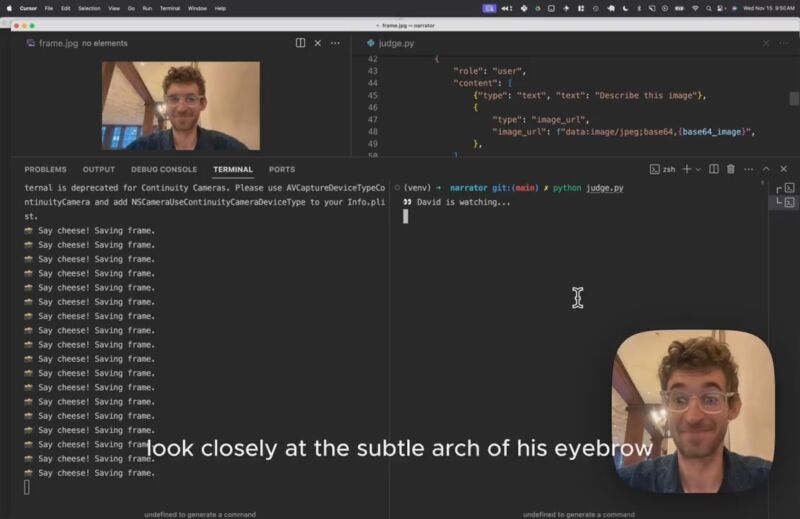

On Wednesday, Replicate developer Charlie Holtz combined GPT-4 Vision (commonly called GPT-4V) and ElevenLabs voice cloning technology to create an unauthorized AI version of the famous naturalist David Attenborough narrating Holtz’s every move on camera. As of Thursday afternoon, the X post describing the stunt had garnered over 21,000 likes.

“Here we have a remarkable specimen of Homo sapiens distinguished by his silver circular spectacles and a mane of tousled curly locks,” the false Attenborough says in the demo as Holtz looks on with a grin. “He’s wearing what appears to be a blue fabric covering, which can only be assumed to be part of his mating display.”

“Look closely at the subtle arch of his eyebrow,” it continues, as if narrating a BBC wildlife documentary. “It’s as if he’s in the midst of an intricate ritual of curiosity or skepticism. The backdrop suggests a sheltered habitat, possibly a communal feeding area or watering hole.”

How does it work? Every five seconds, a Python script called “narrator” takes a photo from Holtz’s webcam and sends it through OpenAI’s API to GPT-4V, the version of OpenAI’s language model that can process image inputs, along with a special prompt that makes it write text in the style of Attenborough’s narrations. The script then feeds that text into an ElevenLabs AI voice profile trained on audio samples of Attenborough’s speech. Holtz has published the code on GitHub. Running it requires API keys for both OpenAI and ElevenLabs, and using those APIs costs money.
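The capture, describe, and speak loop can be sketched in a few lines of Python. This is not Holtz’s code. It is a minimal sketch that assumes the OpenAI Chat Completions image-input message format, in which an `image_url` content part carries the frame as a base64 data URL. The names `build_vision_messages`, `narrate_loop`, `capture`, `describe`, and `speak`, and the prompt text, are all hypothetical. The network calls are passed in as plain functions so the sketch does not have to guess at either vendor’s SDK signatures.

```python
import base64
import time
from typing import Callable, Optional

# Hypothetical persona prompt; the real "narrator" prompt is not reproduced here.
SYSTEM_PROMPT = (
    "You are Sir David Attenborough. Narrate the picture of the human "
    "as if it is a nature documentary. Be brief."
)


def build_vision_messages(jpeg_bytes: bytes, prompt: str = SYSTEM_PROMPT) -> list:
    """Package one webcam frame as a Chat Completions-style vision request.

    Assumption: the image travels inline as a base64 data URL, and the
    system message steers the model toward the narrator persona.
    """
    encoded = base64.b64encode(jpeg_bytes).decode("ascii")
    return [
        {"role": "system", "content": prompt},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": "Describe this image."},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                },
            ],
        },
    ]


def narrate_loop(
    capture: Callable[[], bytes],     # hypothetical: returns one JPEG frame from the webcam
    describe: Callable[[list], str],  # hypothetical: sends messages to a vision model, returns its text
    speak: Callable[[str], None],     # hypothetical: sends text to a cloned TTS voice and plays it
    interval_s: float = 5.0,
    max_frames: Optional[int] = None,
) -> list:
    """Run capture -> describe -> speak, pausing `interval_s` seconds between frames.

    With max_frames=None the loop runs until interrupted.
    """
    narrations = []
    frames = 0
    while max_frames is None or frames < max_frames:
        messages = build_vision_messages(capture())
        text = describe(messages)
        speak(text)
        narrations.append(text)
        frames += 1
        # Only wait if another frame will follow.
        if max_frames is None or frames < max_frames:
            time.sleep(interval_s)
    return narrations
```

In a real setup, `describe` would wrap an OpenAI API call and `speak` would wrap an ElevenLabs text-to-speech request. Because each step runs sequentially, the effective time between narrations is the five-second pause plus however long the two API round trips take.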

In a similar demo posted on X by Pietro Schirano, you can hear the cloned voice of Steve Jobs critiquing designs created in Figma, a design app. Schirano used the same basic technique: he streamed an image to GPT-4V via the API (prompting it to reply in the style of Jobs), then fed the resulting text into an ElevenLabs clone of Jobs’ voice.

We’ve previously covered voice cloning technology, which raises ethical and legal concerns because the software can create convincing deepfakes of a person’s voice, making them “say” things the real person never said. This has legal implications for a celebrity’s publicity rights, and the technology has already been used to scam people by faking the voices of loved ones asking for money. ElevenLabs’ terms of service prohibit people from making clones of other people’s voices in a way that would violate “Intellectual Property Rights, publicity rights and Copyright,” but it’s a rule that can be difficult to enforce.

For now, while some people expressed deep discomfort at someone imitating Attenborough’s voice without permission, many others seemed amused by the demo. “Okay, I’m going to get David Attenborough to narrate videos of my baby learning how to eat broccoli,” quipped Jeremy Nguyen in an X reply.