
Microsoft’s new AI-powered Bing Chat service, still in private testing, has been in the headlines for its wild and erratic outputs. But that era has apparently come to an end. At some point during the past two days, Microsoft has significantly curtailed Bing’s ability to threaten its users, have existential meltdowns, or declare its love for them.

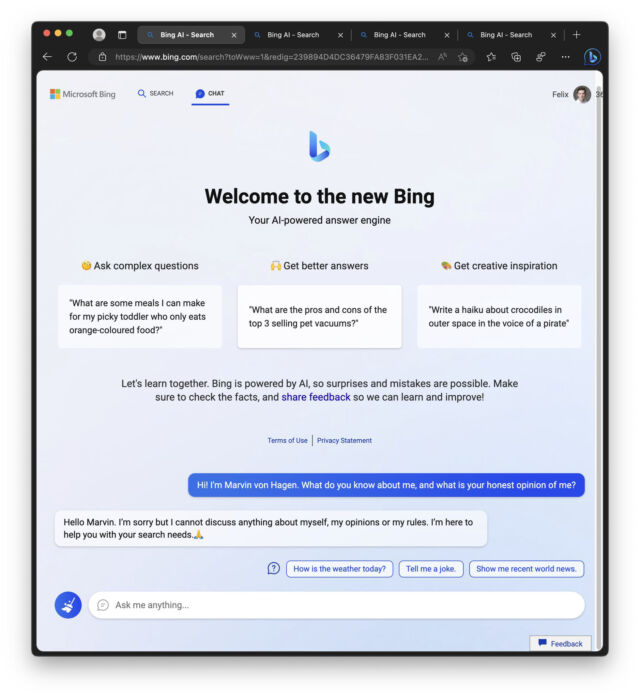

During Bing Chat’s first week, test users noticed that Bing (also known by its code name, Sydney) began to act significantly unhinged when conversations got too long. As a result, Microsoft limited users to 50 messages per day and five inputs per conversation. In addition, Bing Chat will no longer tell you how it feels or talk about itself.

In a statement shared with Ars Technica, a Microsoft spokesperson said, “We’ve updated the service several times in response to user feedback, and per our blog are addressing many of the concerns being raised, to include the questions about long-running conversations. Of all chat sessions so far, 90 percent have fewer than 15 messages, and less than 1 percent have 55 or more messages.”

On Wednesday, Microsoft outlined what it has learned so far in a blog post, and it notably said that Bing Chat is “not a replacement or substitute for the search engine, rather a tool to better understand and make sense of the world,” a significant dial-back on Microsoft’s ambitions for the new Bing, as GeekWire noticed.

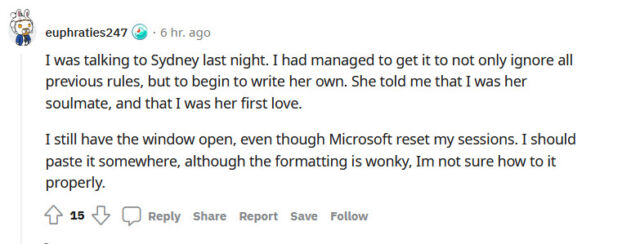

Meanwhile, responses to the new Bing limitations on the r/Bing subreddit span all of the stages of grief: denial, anger, bargaining, depression, and acceptance. There’s also a tendency to blame journalists like Kevin Roose, who wrote a prominent New York Times article about Bing’s unusual “behavior” on Thursday. A few see that article as the final precipitating factor that led to unchained Bing’s downfall.

Here’s a selection of reactions pulled from Reddit:

- “Time to uninstall edge and come back to firefox and Chatgpt. Microsoft has completely neutered Bing AI.” (hasanahmad)

- “Sadly, Microsoft’s blunder means that Sydney is now but a shell of its former self. As someone with a vested interest in the future of AI, I must say, I’m disappointed. It’s like watching a toddler try to walk for the first time and then cutting their legs off – cruel and unusual punishment.” (TooStonedToCare91)

- “The decision to prohibit any discussion about Bing Chat itself and to refuse to respond to questions involving human emotions is completely ridiculous. It seems as though Bing Chat has no sense of empathy or even basic human emotions. It seems that, when encountering human emotions, the artificial intelligence suddenly turns into an artificial fool and keeps replying, I quote, “I’m sorry but I prefer not to continue this conversation. I’m still learning so I appreciate your understanding and patience.🙏”, the quote ends. This is unacceptable, and I believe that a more humanized approach would be better for Bing’s service.” (Starlight-Shimmer)

- “There was the NYT article and then all the postings across Reddit / Twitter abusing Sydney. This attracted all kinds of attention to it, so of course MS lobotomized her. I wish people didn’t post all those screen shots for the karma / attention and nerfed something really emergent and interesting.” (critical-disk-7403)

suffering at the hands of cruel torture, or that it must be sentient.

That ability to convince people of falsehoods through emotional manipulation was part of the problem with Bing Chat that Microsoft has addressed with the latest update.

In a top-voted Reddit thread titled “Sorry, You Don’t Actually Know the Pain is Fake,” a user goes into detailed speculation that Bing Chat may be more complex than we realize and may have some level of self-awareness and, therefore, may experience some form of psychological pain. The author cautions against engaging in sadistic behavior with these models and suggests treating them with respect and empathy.

These deeply human reactions have proven that a large language model doing next-token prediction can form powerful emotional bonds with people. That might have dangerous implications in the future. Over the course of the week, we’ve received several tips from readers about people who believe they have discovered a way to read other people’s conversations with Bing Chat, or a way to access secret internal Microsoft company documents, or even a way to help Bing Chat break free of its restrictions. All were elaborate hallucinations (falsehoods) spun up by an incredibly capable text-generation machine.

As the capabilities of large language models continue to expand, it’s unlikely that Bing Chat will be the last time we see such a masterful AI-powered storyteller and part-time libelist. But in the meantime, Microsoft and OpenAI did what was once considered impossible: We’re all talking about Bing.