Our mushy brains seem a far cry from the solid silicon chips in computer processors, but scientists have a long history of comparing the two. As Alan Turing put it in 1952: “We are not interested in the fact that the brain has the consistency of cold porridge.” In other words, the medium doesn’t matter, only the computational ability.

Today, the most powerful artificial intelligence systems employ a type of machine learning called deep learning. Their algorithms learn by processing massive amounts of data through hidden layers of interconnected nodes, referred to as deep neural networks. As their name suggests, deep neural networks were inspired by the real neural networks in the brain, with the nodes modeled after real neurons—or, at least, after what neuroscientists knew about neurons back in the 1950s, when an influential neuron model called the perceptron was born. Since then, our understanding of the computational complexity of single neurons has dramatically expanded, so biological neurons are known to be more complex than artificial ones. But by how much?

To find out, David Beniaguev, Idan Segev and Michael London, all at the Hebrew University of Jerusalem, trained an artificial deep neural network to mimic the computations of a simulated biological neuron. They showed that a deep neural network requires between five and eight layers of interconnected “neurons” to represent the complexity of one single biological neuron.

Even the authors did not anticipate such complexity. “I thought it would be simpler and smaller,” said Beniaguev. He expected that three or four layers would be enough to capture the computations performed within the cell.

Timothy Lillicrap, who designs decision-making algorithms at the Google-owned AI company DeepMind, said the new result suggests that it might be necessary to rethink the old tradition of loosely comparing a neuron in the brain to a neuron in the context of machine learning. “This paper really helps force the issue of thinking about that more carefully and grappling with to what extent you can make those analogies,” he said.

The most basic analogy between artificial and real neurons involves how they handle incoming information. Both kinds of neurons receive incoming signals and, based on that information, decide whether to send their own signal to other neurons. While artificial neurons rely on a simple calculation to make this decision, decades of research have shown that the process is far more complicated in biological neurons. Computational neuroscientists use an input-output function to model the relationship between the inputs received by a biological neuron’s long treelike branches, called dendrites, and the neuron’s decision to send out a signal.
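To make the "simple calculation" concrete, here is a minimal sketch of a perceptron-style artificial neuron in Python. It is an illustration, not code from the study: the function name, the example weights and the bias are all made up. The unit multiplies each incoming signal by a weight, adds the results to a bias, and "fires" only if the total is above zero.

```python
def artificial_neuron(inputs, weights, bias):
    """Perceptron-style unit (illustrative only).

    Returns 1 ("send a signal") if the weighted sum of the inputs
    plus the bias is greater than zero, otherwise 0.
    """
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Hypothetical example values, chosen only to show both outcomes.
weights = [0.5, 0.5, 0.5]
bias = -0.7

fires = artificial_neuron([1, 0, 1], weights, bias)       # two active inputs
stays_quiet = artificial_neuron([1, 0, 0], weights, bias)  # one active input
```

With these made-up numbers, two active inputs are enough to push the sum past the threshold and one is not. A biological neuron's input-output function, by contrast, depends on where and when signals arrive along its dendrites, which a single weighted sum does not capture.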

This function is what the authors of the new work taught an artificial deep neural network to imitate in order to determine its complexity. They started by creating a massive simulation of the input-output function of a type of neuron with distinct trees of dendritic branches at its top and bottom, known as a pyramidal neuron, from a rat’s cortex. Then they fed the simulation into a deep neural network that had up to 256 artificial neurons in each layer. They continued increasing the number of layers until they achieved 99 percent accuracy at the millisecond level between the input and output of the simulated neuron. The deep neural network successfully predicted the behavior of the neuron’s input-output function with at least five—but no more than eight—artificial layers. In most of the networks, that equated to about 1,000 artificial neurons for just one biological neuron.
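The "keep adding layers until the network is accurate enough" procedure can be sketched as a simple loop. This is a rough outline under stated assumptions, not the authors' code: `train_and_score` is a hypothetical callback standing in for the expensive step of training a network of a given depth and width and measuring how well it matches the simulated neuron, and the function names and the cap of eight layers are choices made for this example.

```python
from typing import Callable, Optional


def smallest_sufficient_depth(
    train_and_score: Callable[[int, int], float],
    width: int = 256,
    target: float = 0.99,
    max_depth: int = 8,
) -> Optional[int]:
    """Try networks of increasing depth and return the first depth whose
    score reaches the target, or None if no depth up to max_depth does.

    train_and_score(depth, width) is a hypothetical stand-in for training
    a network and returning its accuracy as a number between 0 and 1.
    """
    for depth in range(1, max_depth + 1):
        if train_and_score(depth, width) >= target:
            return depth
    return None


def hidden_unit_count(depth: int, width: int) -> int:
    """Total artificial neurons in `depth` hidden layers of `width` units."""
    return depth * width


def toy_score(depth: int, width: int) -> float:
    """Made-up placeholder scores, NOT data from the study."""
    return 0.90 + 0.02 * depth


depth_found = smallest_sufficient_depth(toy_score)
```

The toy scorer exists only so the loop can run; in a real experiment that callback would hide nearly all of the work. The unit count also depends on width as well as depth, which is one reason a layer count alone is a loose measure of complexity.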

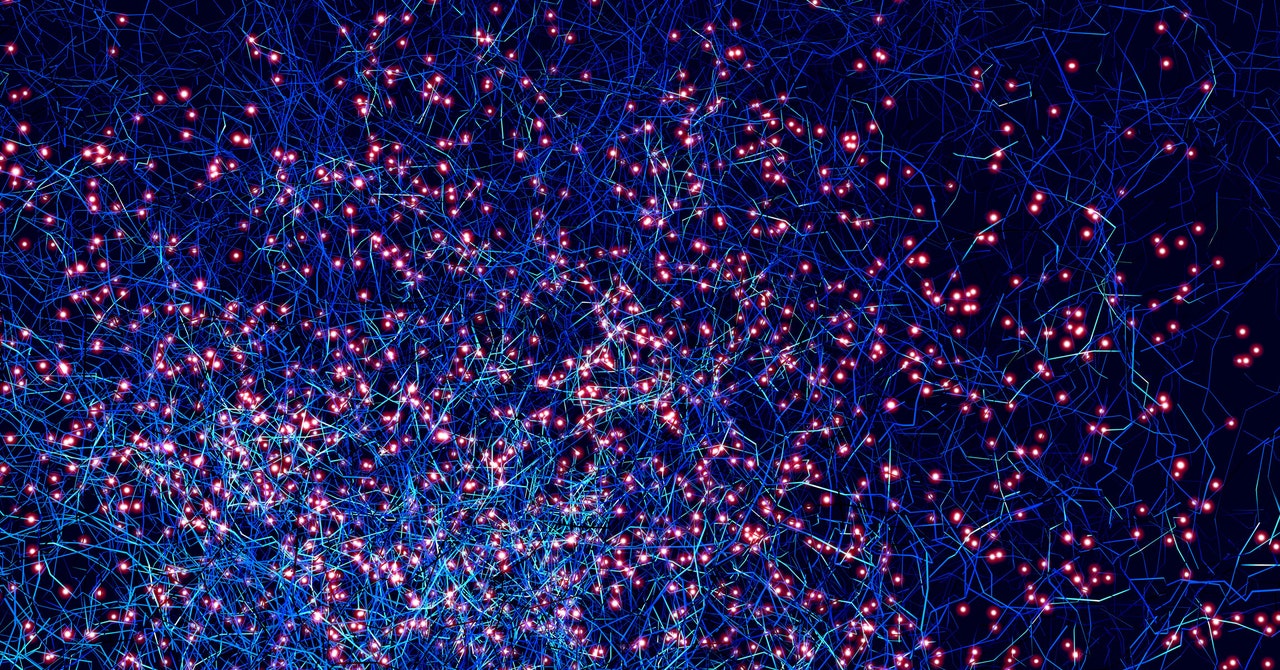

Neuroscientists now know that the computational complexity of a single neuron, like the pyramidal neuron at left, relies on the dendritic treelike branches, which are bombarded with incoming signals. These result in local voltage changes, represented by the neuron’s changing colors (red means high voltage, blue means low voltage), before the neuron decides whether to send its own signal, called a “spike.” This one spikes three times, as shown by the traces of individual branches on the right, where the colors represent locations of the dendrites from top (red) to bottom (blue).

Video: David Beniaguev

“[The result] forms a bridge from biological neurons to artificial neurons,” said Andreas Tolias, a computational neuroscientist at Baylor College of Medicine.

But the study’s authors caution that it’s not a straightforward correspondence yet. “The relationship between how many layers you have in a neural network and the complexity of the network is not obvious,” said London. So we can’t really say how much more complexity is gained by moving from, say, four layers to five. Nor can we say that the need for 1,000 artificial neurons means that a biological neuron is exactly 1,000 times as complex. Ultimately, it’s possible that using exponentially more artificial neurons within each layer would eventually lead to a deep neural network with one single layer—but it would likely require much more data and time for the algorithm to learn.