It’s probably for the best that human babies can’t run 9 miles per hour shortly after birth. It takes years of practice to crawl and then walk well, during which time mothers don’t have to worry about their children legging it out of the county. Roboticists don’t have that kind of time to spare, however, so they’re developing ways for machines to learn to move through trial and error—just like babies, only way, way faster.

Video: MIT

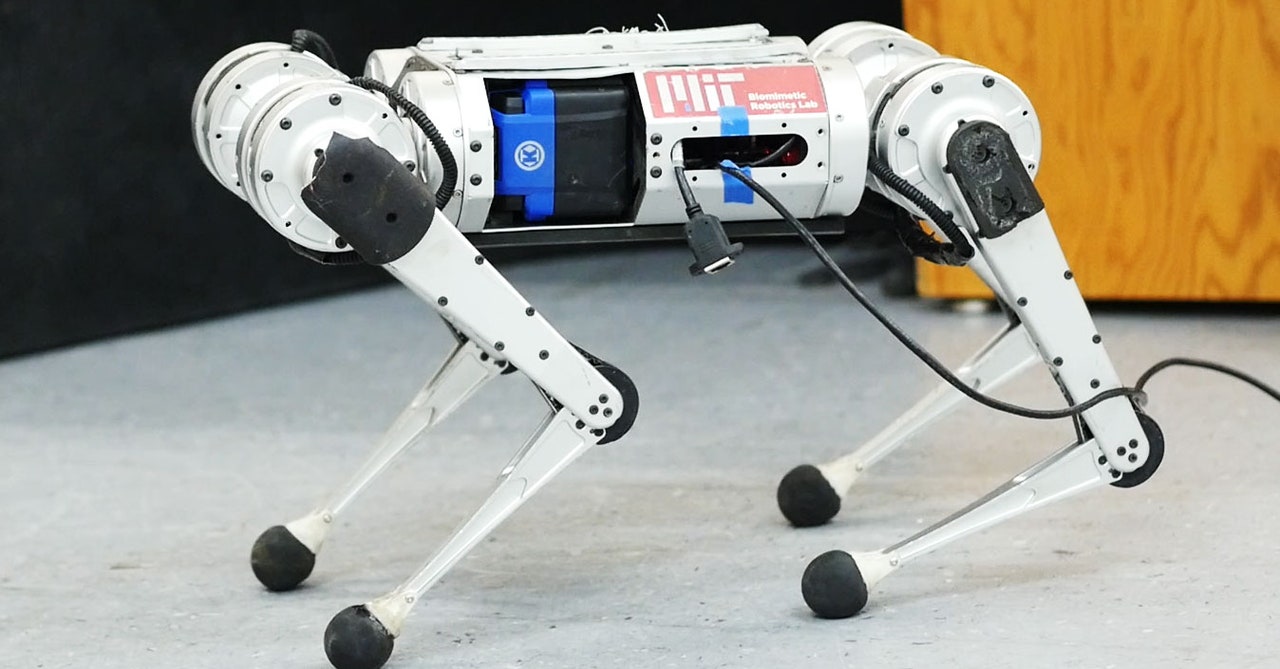

Yeah, OK, what you’re looking at in the video above isn’t the most graceful locomotion. But MIT scientists announced last week that they got this research platform, a four-legged machine known as Mini Cheetah, to hit its fastest speed ever—nearly 13 feet per second, or 9 miles per hour—not by meticulously hand-coding its movements line by line, but by encouraging digital versions of the machine to experiment with running in a simulated world. What the system landed on is … unconventional. But the researchers were able to port what the virtual robot learned into this physical machine that could then bolt across all kinds of terrain without falling on its, um, face.

This technique is known as reinforcement learning. Think of it like dangling a toy in front of a baby to encourage it to crawl, only here the researchers simulated 4,000 versions of the robot and encouraged them to first learn to walk, then to run in multiple directions. The digital Mini Cheetahs took trial runs on unique simulated surfaces, each programmed with its own levels of characteristics like friction and softness. This prepared the virtual robots for the range of surfaces they’d need to tackle in the real world, like grass, pavement, ice, and gravel.

The thousands of simulated robots could try all kinds of different ways of moving their limbs. Techniques that resulted in speediness were rewarded, while bad ones were tossed out. Over time, the virtual robots learned through trial and error, like a human does. But because this was happening digitally, the robots were able to learn way faster: Just three hours of practice time in the simulation equaled 100 hours in the real world.
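For the curious, the reward-and-discard loop can be sketched in a few lines of Python. This is a toy illustration, not the MIT team's code: it swaps in simple hill climbing for their reinforcement learning algorithm, and the one-number "gait" (`stride_rate`), the slip rule in `simulate_speed`, and the friction range are all made-up assumptions. Only the 4,000 randomized surfaces and the speed-based reward echo the description above.

```python
import random


def simulate_speed(stride_rate, friction):
    """Toy physics (hypothetical): speed equals stride rate until the feet slip."""
    max_grip_rate = 10.0 * friction  # assumed: slippery ground tolerates slower strides
    if stride_rate > max_grip_rate:
        return 0.0  # the robot slips and falls, so this trial earns no reward
    return stride_rate


def average_reward(stride_rate, frictions):
    """Reward for a gait: its average speed across every simulated surface."""
    total = sum(simulate_speed(stride_rate, f) for f in frictions)
    return total / len(frictions)


def train(num_surfaces=4000, steps=200, seed=0):
    """Hill climbing: try a random tweak, keep it only if the reward goes up."""
    rng = random.Random(seed)
    # Each simulated surface gets its own randomly drawn friction level.
    frictions = [rng.uniform(0.3, 1.0) for _ in range(num_surfaces)]
    best_rate = 1.0  # start with a slow, cautious gait
    best_reward = average_reward(best_rate, frictions)
    for _ in range(steps):
        candidate = max(0.0, best_rate + rng.gauss(0.0, 0.5))
        reward = average_reward(candidate, frictions)
        if reward > best_reward:  # speedy tweaks are kept, the rest are tossed out
            best_rate, best_reward = candidate, reward
    return best_rate, best_reward


if __name__ == "__main__":
    rate, reward = train()
    print(f"learned stride rate: {rate:.2f}, average speed: {reward:.2f}")
```

Because falling earns nothing, the search settles on a pace that is fast but still keeps the robot upright on most surfaces, rather than the fastest pace possible on the grippiest one.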

Then the researchers ported what the digital robots had learned about running on different surfaces into the real-life Mini Cheetah. The robot doesn’t have a camera, so it can’t see its surroundings in order to adjust its gait. Instead, it calculates its balance and keeps track of how its footsteps are propelling it forward. For example, if it’s walking on grass, it can refer back to its digital training on a surface with the same friction and softness as the actual turf. “Rather than a human prescribing exactly how the robot should walk, the robot learns from a simulator and experience to essentially achieve the ability to run both forward and backward, and turn—very, very quickly,” says Gabriel Margolis, an AI researcher at MIT who codeveloped the system.

The result isn’t especially elegant, but it is stable and fast, and the robot largely did it on its own. Mini Cheetah can scramble down a hill as gravel shifts underfoot and keep its balance on patches of ice. It can recover from a stumble and even adapt to continue moving if one of its legs is disabled.

To be clear, this isn’t necessarily the safest or most energy-efficient way for the robot to run; the team was only optimizing for speed. But it’s a radical departure from how cautiously other robots have to move through the world. “Most of these robots are really slow,” says Pulkit Agrawal, an AI researcher at MIT who codeveloped the system. “They don’t walk fast, or they can’t run. And even when they’re walking, they’re just walking straight. Or they can turn, but they can’t do agile behaviors like spinning at fast speeds.”